I.J. of Electronics and Information Engineering, Vol.12, No.1, PP.34-41, Mar. 2020 (DOI: 10.6636/IJEIE.202003 12(1).05)

Decision Support System for Real-Time People Counting in a Crowded Environment

Tanweer Alam¹ and Mohammed Aljohani²
(Corresponding author: Tanweer Alam)

¹Computer Science Department, Islamic University of Madinah
²Information System Department, Islamic University of Madinah
170, Prince Naif bin Abdulaziz Road, Madinah, Saudi Arabia
(Email: corresponding [email protected])
(Received Jan. 15, 2020; revised and accepted Feb. 5, 2020)

Abstract In crowded environments, a people-counting decision support system is very useful for counting the number of people. Counting people is very difficult because overlapping limits the identification of body parts. The authors propose a decision support system that provides reliable, real-time performance for automated people counting in crowded areas. The suggested solution is a detection-based counting method built on a decision support system. The decision support system is designed to be used in surveillance-camera application scenarios as an autonomous appliance for crowd analysis.
Keywords: Counting; Crowd Management; Decision Support System; Real-Time

1 Introduction

Almost all counting techniques rely on identifying individuals in order to count them. When counting is required in real time and the crowd is dense, this becomes inefficient (see Figure 1). Each algorithm has its own advantages and disadvantages in terms of speed, complexity, and accuracy. In numerous situations, it is important to be able to estimate the number of people in a crowded environment in real time. A quadcopter-based technique for monitoring the crowd environment and detecting cognition roots anytime and anywhere is described in [1]. A real-time count can be used to enforce capacity limits in a city, actively manage city services, allocate resources for public events, assist with crowd control during public events, and monitor emergency or suspicious incidents in crowded environments. There are currently no validated methods for estimating the size of a large crowd. Watermarked images and the corresponding recovered images are generated in [22]. The simplest options are to photograph the crowd or to station many people at various checkpoints to count how many people pass by. The first option is feasible in outdoor settings. However, because of restricted distance, occlusions, and similar factors, it can be difficult to obtain exact automated counts from aerial photos. Moreover, such a count is accurate only for the moment at which the photos were taken. Crowd analysis using computer vision techniques is presented in

Electronic copy available at: https://ssrn.com/abstract=3639076


Figure 1: Crowd in Al Haram Makkah

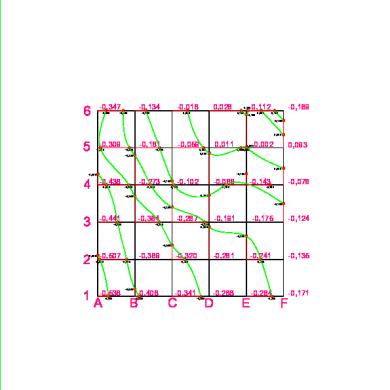

[24]. In the second approach, the counts from the different checkpoints can be combined to estimate the total number of people who were part of the crowd during the counting period, but not at any particular moment. People at a busy checkpoint can also find it hard to estimate the number. Both approaches are labor-intensive, and neither can produce results in real time [2, 12]. Crowd counting is a complicated problem because so many occlusions exist. Even with strong prior assumptions and no numerical limitations, it is often impossible to count a crowd from a single viewpoint. One potential solution to this problem is to use multiple sensors in a sensor network. Instead of having people at checkpoints, the system can use a network of many image sensors [13]. Depending on the geometry, the sensor network can form clusters, so that each cluster counts the number of people at a local checkpoint (see Figure 2). These clusters can communicate with each other to determine the overall crowd count in the area enclosed by all the checkpoints [16]. Each sensor node has limited processing power, so the images can only be processed in simple ways, and the network has limited bandwidth, so the information must be aggregated efficiently [6]. In addition, the algorithms must be lightweight enough to run in real time. In this paper, we describe an approach to counting crowds with restricted computation and bandwidth in this sensor network environment [7]. The tracking cluster consists of 6 interconnected cameras that provide real-time counting of people in a 148 sq-ft zone. Because smart devices are scalable and reliable, many such clusters can be merged into a much larger sensor network to count people over a much larger region. Typically, to count a group of objects, each object must first be located.
Locating multiple objects in a scene, however, is a demanding task because objects often look alike or occlude one another, which makes data association difficult. In crowded environments, some objects may be completely hidden from all views and are therefore difficult to track separately. Our methodology computes bounds on the number of objects in a region rather than tracking individual objects, in order to avoid these


Figure 2: DSS based crowd management system

pitfalls. This crucial difference enables our system to work well even in crowded environments [4]. Each sensor in the system separates the foreground from the background and transmits the resulting bitmap over the network [5]. Both the processing and the network bandwidth required for this are modest. The information collected from all sensors is used in real time to compute a planar projection of the visual hull of the scene. This projection is used to bound the number of people and their possible locations. That is a non-trivial task, because parts of the visual hull will simply be empty. The system keeps track of the regions that are assumed to be occupied. The methodology does not rely on identifying specific people in the images, so no matching of individuals across frames is required [15]. Therefore, our computational requirements scale well with both the number of sensors and the number of people. The resulting system is also robust to individual sensor failures.

This article is structured as follows. Related work is reviewed in Section 2. The techniques developed for our sensor network are discussed in Section 3. Section 4 describes the architecture of the system and the simulated and realistic experiments we conducted in crowded conditions to test our method. Finally, Section 5 gives concluding remarks and outlines future work.
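The per-sensor step described above can be illustrated with a minimal Python sketch. The text says only that each sensor separates foreground from background (background subtraction, per the conclusion) and transmits a bitmap. The running-average background model, the `alpha` and `threshold` values, and the helper names `update_background`, `foreground_mask` and `pack_mask` are assumptions for illustration. The mask is packed at one bit per pixel to reflect the limited network bandwidth.

```python
from typing import List

Image = List[List[float]]  # grayscale intensities, row-major
Mask = List[List[bool]]    # True marks a foreground pixel


def update_background(background: Image, frame: Image, alpha: float = 0.05) -> Image:
    """Assumed running-average background model: B <- (1 - alpha) * B + alpha * I."""
    return [
        [(1.0 - alpha) * b + alpha * f for b, f in zip(b_row, f_row)]
        for b_row, f_row in zip(background, frame)
    ]


def foreground_mask(background: Image, frame: Image, threshold: float = 25.0) -> Mask:
    """Foreground = pixels that differ from the background by more than `threshold`."""
    return [
        [abs(f - b) > threshold for b, f in zip(b_row, f_row)]
        for b_row, f_row in zip(background, frame)
    ]


def pack_mask(mask: Mask) -> bytes:
    """Pack the mask at one bit per pixel (most significant bit first) for transmission."""
    bits = [bit for row in mask for bit in row]
    packed = bytearray((len(bits) + 7) // 8)
    for i, bit in enumerate(bits):
        if bit:
            packed[i // 8] |= 0x80 >> (i % 8)
    return bytes(packed)


# Tiny example: a 2 x 4 scene with two bright foreground pixels.
background = [[10.0] * 4 for _ in range(2)]
frame = [[10.0, 200.0, 10.0, 10.0], [10.0, 10.0, 10.0, 180.0]]
mask = foreground_mask(background, frame)
print(pack_mask(mask).hex())  # 41
background = update_background(background, frame)
```

At one bit per pixel, a 148 × 148 bitmap fits in 2,738 bytes. This sketch ignores shadows and lighting changes, which a deployed sensor would have to handle.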

2 Related Works

A variety of multi-camera systems address the problem of tracking objects across multiple views. Most use stereo techniques to combine information from several sensors. For example, some systems use a single camera to identify people, while several others use stereo cameras. Furthermore, the real-time system proposed in [23] applies a stereo-like algorithm to all pairs of uni-directional sensors. Although multi-baseline stereo can aggregate data from more than a single pair of cameras, its applicability is limited by its computational cost [10]. Certain strategies rely on a shape or color model to distinguish different objects in each view. In one example, objects are identified using both color and shape, and their positions are determined by matching across groups of cameras. Deterministic simulations could be used to decide whether two items


seen in different views were the same. Moreover, matching color-coded objects across pairs of views can be computationally intensive because of the large number of possible matches [20]. Tracking is generally regarded as a harder task than counting [11]: if we can track every person in a scene, we also know how many people there are. Another counting approach uses non-overlapping sensors mounted along a route and counts the number of objects passing by. Motion models can be used to establish object correspondences across views [3]. This method also relies on tracking individual objects as they pass between sensors, and it does not scale when the number of objects is large, as in a gathering. Crowd counting becomes most relevant when tracking everyone in a scene becomes impossible [18]. This usually occurs in crowded scenes, in which individuals are often hidden from all views.
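To make the cost argument concrete, the following back-of-the-envelope sketch counts candidate matches when every pair of cameras compares every pair of objects. The cost model is a simplifying assumption, not one taken from the cited works. It supposes exhaustive object-to-object comparison across all camera pairs.

```python
from math import comb


def pairwise_match_candidates(num_cameras: int, objects_per_view: int) -> int:
    """Candidate matches if each camera pair compares every object in one view
    with every object in the other (an assumed, exhaustive cost model)."""
    return comb(num_cameras, 2) * objects_per_view ** 2


for cameras in (2, 6, 12):
    for people in (10, 50):
        print(f"{cameras:2d} cameras, {people:3d} people per view: "
              f"{pairwise_match_candidates(cameras, people):,} candidate matches")
```

Under this model the count grows quadratically in both the number of cameras and the number of objects. This is why counting methods that avoid cross-view matching scale better in crowds.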

3 Methodologies

We assume that our sensor network is composed of basic building blocks [14]. Traditional CMOS manufacturing techniques allow groups of logic gates to be etched next to each pixel without significantly increasing the sensor cost. However, this additional processing power is useful only for computations that involve the pixels adjacent to each pixel. Complex operations such as object detection or segmentation therefore require a dedicated computer and are not permitted in our design [17]. The bandwidth of the sensor network is also limited [28]. Computing pairwise matches between all sensors in the system would require transmitting images from all sensors to a powerful central computer [27]. At most, we can assume that images are compared only between neighboring sensors, although in practice even this assumption causes complications. Therefore, our simple sensors have no object model and only subtract the background locally. Objects are neither identified nor segmented in individual views [21]. By integrating data from all the sensors to compute a ground-plane representation of the 3D visual hull, we avoid computing pairwise correspondences [19]. This approximation can be used to count objects that are constrained to move in two dimensions [8]. More information on sensor networks and their architectural constraints is given in [9]. A visual hull is the intersection of the cones swept out from each camera's viewpoint by the silhouettes of the objects seen in that view. It is the largest volume that is consistent with all the silhouette information [25]. Because of numerical robustness issues, computing the exact geometric visual hull is challenging, so approximations are preferred in practice. A voxel representation is often used, but it is too expensive for our real-time system. Other representations have also been developed for techniques such as image-based rendering.
Only a planar projection of the visual hull is required in this article, which avoids the cost of a full 3D representation of the visual hull. The projection is approximately a top view of the scene. In a related closed-world approach, objects are recorded with an overhead camera. Moreover, that technique explicitly represents objects and requires knowledge of the exact number of objects. That number may not be available, particularly in crowded situations.
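The visual-hull construction can be illustrated in two dimensions, which matches the top-view projection used here. This is a minimal sketch with assumptions made for illustration only:

- each camera sees a 1D image, and each silhouette is an interval of bearings;
- people are modelled as discs;
- a grid cell belongs to the planar hull if its centre falls inside a silhouette wedge of every camera.

The function names, camera positions and disc model are hypothetical, and bearings are assumed not to wrap around ±π.

```python
import math
from typing import List, Set, Tuple

Point = Tuple[float, float]
Interval = Tuple[float, float]  # (min_bearing, max_bearing) in radians; assumed not to wrap around +-pi


def bearing(camera: Point, point: Point) -> float:
    """Direction from the camera to a point on the ground plane."""
    return math.atan2(point[1] - camera[1], point[0] - camera[0])


def disc_silhouette(camera: Point, center: Point, radius: float) -> Interval:
    """Bearing interval subtended by a disc (a stand-in for a person) seen from the camera."""
    half_width = math.asin(min(1.0, radius / math.dist(camera, center)))
    b = bearing(camera, center)
    return (b - half_width, b + half_width)


def planar_visual_hull(cameras: List[Point], silhouettes: List[List[Interval]],
                       width: int, height: int) -> Set[Tuple[int, int]]:
    """Unit grid cells whose centre lies inside a silhouette wedge of every camera."""
    hull = set()
    for i in range(width):
        for j in range(height):
            centre = (i + 0.5, j + 0.5)
            if all(any(lo <= bearing(cam, centre) <= hi for lo, hi in sils)
                   for cam, sils in zip(cameras, silhouettes)):
                hull.add((i, j))
    return hull


# Two cameras outside a 10 x 10 area and one person standing at (2.5, 7.5).
cameras = [(5.0, -10.0), (-10.0, 5.0)]
person, radius = (2.5, 7.5), 0.4
silhouettes = [[disc_silhouette(cam, person, radius)] for cam in cameras]
hull = planar_visual_hull(cameras, silhouettes, 10, 10)
print(sorted(hull))
```

With several people, wedges from different people can also intersect in empty space. This produces the phantom regions that the counting framework in Section 4 has to discard.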

4 Simulated and Realistic Experiments

A decision support system (DSS) is a computer-based information system that supports decision-making in the operations of public or private organizations. A DSS facilitates processes, services, and organization. Generally, a DSS helps an organization's senior managers envision their decisions from an artificial intelligence viewpoint. A DSS can also help solve unstructured and semi-structured decision-making problems [26]. The support provided by a DSS can be categorized into three types:


organizational support, group support, and personal support. In addition, a DSS can be divided into four parts:
1) Inputs, which contain numbers, factors, and characteristics.
2) User expertise and knowledge, since manual analysis of the user inputs is needed.
3) Decision processing by the DSS, which depends on user knowledge.
4) Outputs, which contain the processed data from which the DSS decisions are derived.

Decision support systems can be computerized and combined with human decision-making. We propose using a decision support system for traffic management. Such a system offers capabilities that could be used to reduce road traffic congestion, improve incident response times, and ensure better traffic flow. A large number of factors must be taken into account to determine the current traffic flow across the whole city, and these factors must be included in the decision-making process. These input data are usually processed by an online management system using a network of sensor detectors. The decision support system makes decisions based on a traffic flow model, which includes many factors such as traffic density, road capacity, and average speed. There are many ways to control traffic, depending on the nature of the traffic problem. We propose using a DSS because this problem should be solved by analyzing the whole picture in real time to reach the optimal solution.

The algorithm for real-time counting is as follows:
1) Capture video from the video camera.
2) Read the next image into the frame.
3) Check the frame: if (frame != null), detect faces in the current frame and print the total number of detected faces; otherwise, stop the program.

The basic framework of the counting algorithm is as follows:
1) Every sensor obtains new observations. Compute the planar projection of each image.
2) Intersect all projections. Store the resulting polygons in the crowd management database.
3) Delete small polygons and phantoms from the database.
4) Update continuously through the DSS.
5) Report the new bounds on the number of people in the area.
6) Repeat until the whole crowd is managed.

These tests are designed to evaluate the accuracy of the counting method. In the experiments, several people entered, moved around, and left the area. Two runs are reported, with 10 and 15 persons respectively. For each run, the lower bound is computed and compared with the real count. The upper bound was very high and is not plotted. The lower bound matches the actual count very well. As explained previously, the lower bound is tighter than the upper bound. The upper bound is even weaker here because the actual size of a person is uncertain and differs from person to person. The smallest plausible person size must therefore be used, which makes the upper bound even larger. The bounds are also weaker for polygons near the edge of the area, where people can enter and exit. To obtain a better lower bound for such edge polygons, if a polygon touches the edge of the work area, the


lower bound is not set to zero immediately (since people might have exited), as would be needed to guarantee correctness. Instead, the lower bound is set to zero only once the polygon disappears from the edge. As a result, the lower bound is tighter while people walk along the edge. When people leave, however, there is a lag before the lower bound catches up with the actual count.
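The counting framework listed above can be sketched on an occupancy grid instead of polygons. The sketch makes the following assumptions:

- each sensor's planar projection is given as a set of grid cells;
- 4-connected regions stand in for the polygons;
- a region smaller than the smallest person (`min_person_cells`) is discarded;
- each region's upper bound is its area divided by that minimum size, rounded down.

The lower-bound rules, including the edge rule just described, depend on temporal reasoning that the text does not fully specify. The sketch therefore only flags regions that touch the edge. The default 148-cell grid follows the projection size mentioned in the conclusion. The driver loop stops at a `None` frame, mirroring the null check in the real-time algorithm. All names are hypothetical.

```python
from typing import Dict, Iterable, Iterator, List, Optional, Set, Tuple

Cell = Tuple[int, int]


def intersect_projections(projections: List[Set[Cell]]) -> Set[Cell]:
    """Step 2: keep only cells that are occupied in every sensor's planar projection."""
    return set.intersection(*projections) if projections else set()


def connected_regions(cells: Set[Cell]) -> List[Set[Cell]]:
    """Group cells into 4-connected regions (grid stand-ins for the polygons)."""
    remaining, regions = set(cells), []
    while remaining:
        seed = remaining.pop()
        region, stack = {seed}, [seed]
        while stack:
            x, y = stack.pop()
            for nb in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                if nb in remaining:
                    remaining.remove(nb)
                    region.add(nb)
                    stack.append(nb)
        regions.append(region)
    return regions


def count_frame(projections: List[Set[Cell]], grid_size: int = 148,
                min_person_cells: int = 4) -> List[Dict]:
    """Steps 2, 3 and 5 for one time step: one record per surviving region."""
    records = []
    for region in connected_regions(intersect_projections(projections)):
        if len(region) < min_person_cells:
            continue  # step 3: too small to contain even the smallest person
        on_edge = any(c in (0, grid_size - 1) for cell in region for c in cell)
        records.append({
            "area": len(region),
            "upper": len(region) // min_person_cells,  # floor(area / smallest person area)
            "touches_edge": on_edge,  # input to the edge rule for the lower bound
        })
    return records


def run(frames: Iterable[Optional[List[Set[Cell]]]], grid_size: int = 148,
        min_person_cells: int = 4) -> Iterator[Tuple[int, List[Dict]]]:
    """Step 6 / driver loop: process frames until a None frame arrives."""
    for projections in frames:
        if projections is None:
            break
        records = count_frame(projections, grid_size, min_person_cells)
        yield sum(r["upper"] for r in records), records
```

Because the upper bound divides by the smallest plausible person size, it grows quickly with region area. This agrees with the observation above that the upper bound is much weaker than the lower bound.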

5 Conclusion

The authors developed a system that uses a network of simple image sensors to count people in a crowded environment. They introduced a method that uses the shapes obtained by each sensor through background subtraction to compute bounds on the number and possible locations of people. The system requires no initialization and runs in real time. It does not compute feature correspondences between views, so the computational cost grows only linearly with the number of cameras. The approach is also robust and fault-tolerant. A camera failure causes only a small increase in the area of the estimated visual hull, because one fewer silhouette cone is intersected. The current tracking solution uses centralized communication, which is efficient for a small local sensor cluster. The next step is to introduce a decentralized communication scheme suitable for a much larger sensor infrastructure. Such a scheme would reduce network-wide traffic and improve reliability and usability. In the decentralized approach, local cluster heads, rather than a central node, would be chosen to collect the information. In addition, instead of sending the foreground bitmap, the sensors could just as easily send the 2D projection. For the configuration used here, the projection is a 148 × 148 bitmap.
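For the decentralized extension, a local cluster head could combine the bounds reported by its clusters. The sketch below rests on hypothetical assumptions. Clusters are taken to cover disjoint regions, so the overall lower and upper bounds are simply the sums of the local ones. The `ClusterReport` message format is invented for illustration. The sketch also computes the size of a 148 × 148 projection sent at one bit per cell.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class ClusterReport:
    """Bounds on the number of people reported by one cluster (hypothetical message format)."""
    cluster_id: str
    lower: int
    upper: int


def aggregate(reports: Iterable[ClusterReport]) -> Tuple[int, int]:
    """Sum per-cluster bounds; valid only if the clusters' regions do not overlap."""
    lower = upper = 0
    for r in reports:
        if not 0 <= r.lower <= r.upper:
            raise ValueError(f"inconsistent bounds from cluster {r.cluster_id}")
        lower += r.lower
        upper += r.upper
    return lower, upper


def bitmap_payload_bytes(width: int = 148, height: int = 148) -> int:
    """Bytes needed to send a width x height projection at one bit per cell."""
    return (width * height + 7) // 8


reports = [ClusterReport("north", 3, 7), ClusterReport("south", 0, 2)]
print(aggregate(reports))      # (3, 9)
print(bitmap_payload_bytes())  # 2738
```

If cluster regions overlapped, summing would double-count people in the shared area. The upper bound would stay valid but loose, and the lower bound could become invalid. Cluster boundaries therefore matter for this design.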

Acknowledgments The authors gratefully acknowledge the anonymous reviewers for their valuable comments.

References
[1] D. S. Abdul Minaam, H. M. Abdul Kader, and E. Mahmoud, “Monitoring and detection the cognition roots using quad copter,” International Journal of Electronics & Information Engineering, vol. 9, no. 2, pp. 81–90, 2018.
[2] T. Alam, “Middleware implementation in cloud-MANET mobility model for internet of smart devices,” International Journal of Computer Science and Network Security, vol. 17, no. 5, pp. 86–94, 2017.
[3] T. Alam, “Fuzzy control based mobility framework for evaluating mobility models in MANET of smart devices,” ARPN Journal of Engineering and Applied Sciences, vol. 12, no. 15, pp. 4526–4538, 2017.
[4] T. Alam, “A reliable framework for communication in internet of smart devices using IEEE 802.15.4,” ARPN Journal of Engineering and Applied Sciences, vol. 13, no. 10, pp. 3378–3387, 2018.
[5] T. Alam, “A reliable communication framework and its use in internet of things (IoT),” International Journal of Scientific Research in Computer Science, Engineering and Information Technology, vol. 3, no. 5, pp. 450–456, 2018.
[6] T. Alam, “IoT-Fog: A communication framework using blockchain in the internet of things,” International Journal of Recent Technology and Engineering, vol. 7, no. 6, 2019.


[7] T. Alam, “Blockchain and its role in the internet of things (IoT),” International Journal of Scientific Research in Computer Science, Engineering and Information Technology, vol. 5, no. 1, pp. 151–157, 2019.
[8] T. Alam, “Tactile internet and its contribution in the development of smart cities,” arXiv preprint arXiv:1906.08554, 2019.
[9] T. Alam, “5G-enabled tactile internet for smart cities: Vision, recent developments, and challenges,” Journal Informatika, vol. 13, no. 2, pp. 1–10, July 2019.
[10] T. Alam and M. Aljohani, “An approach to secure communication in mobile ad-hoc networks of Android devices,” in International Conference on Intelligent Informatics and Biomedical Sciences (ICIIBMS’15), pp. 371–375, 2015.
[11] T. Alam and M. Aljohani, “Design a new middleware for communication in ad hoc network of android smart devices,” in Proceedings of the ACM Second International Conference on Information and Communication Technology for Competitive Strategies, pp. 38, 2016.
[12] T. Alam and M. Benaida, “The role of cloud-MANET framework in the internet of things (IoT),” International Journal of Online Engineering, vol. 14, no. 12, pp. 97–111, 2018.
[13] T. Alam and M. Benaida, “CICS: Cloud–internet communication security framework for the internet of smart devices,” International Journal of Interactive Mobile Technologies, vol. 12, no. 6, pp. 74–84, 2018.
[14] T. Alam, P. Kumar, and P. Singh, “Searching mobile nodes using modified column mobility model,” International Journal of Computer Science and Mobile Computing, vol. 3, no. 1, pp. 513–518, 2014.
[15] T. Alam and A. Mohammed, “Design and implementation of an ad hoc network among android smart devices,” in International Conference on Green Computing and Internet of Things (ICGCIoT’15), pp. 1322–1327, 2015.
[16] T. Alam and B. Rababah, “Convergence of MANET in communication among smart devices in IoT,” International Journal of Wireless and Microwave Technologies, vol. 9, no. 2, pp. 1–10, 2019.
[17] T. Alam and B. K. Sharma, “A new optimistic mobility model for mobile ad hoc networks,” International Journal of Computer Applications, vol. 8, no. 3, pp. 1–4, 2010.
[18] T. Alam, A. P. Srivastava, S. Gupta, and R. G. Tiwari, “Scanning the node using modified column mobility model,” Computer Vision and Information Technology: Advances and Applications, vol. 455, 2010.
[19] M. Aljohani and T. Alam, “Design an m-learning framework for smart learning in ad hoc network of android devices,” in IEEE International Conference on Computational Intelligence and Computing Research (ICCIC’15), pp. 1–5, 2015.
[20] M. Aljohani and T. Alam, “An algorithm for accessing traffic database using wireless technologies,” in IEEE International Conference on Computational Intelligence and Computing Research (ICCIC’15), pp. 1–4, 2015.
[21] M. Aljohani and T. Alam, “Real time face detection in ad hoc network of android smart devices,” in Advances in Computational Intelligence: Proceedings of International Conference on Computational Intelligence, Springer Singapore, 2017.
[22] T. Y. Chen, M. S. Hwang, and J. K. Jan, “A secure image authentication scheme for tamper detection and recovery,” The Imaging Science Journal, vol. 60, no. 4, pp. 219–233, 2012.
[23] S. R. Fanello, J. Valentin, C. Rhemann, A. Kowdle, V. Tankovich, P. Davidson, and S. Izadi, “UltraStereo: Efficient learning-based matching for active stereo systems,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2691–2700, 2017.
[24] J. C. S. Jacques Junior, S. R. Musse, and C. R. Jung, “Crowd analysis using computer vision techniques,” IEEE Signal Processing Magazine, vol. 27, no. 5, pp. 66–77, 2010.
[25] W. Matusik, C. Buehler, R. Raskar, S. J. Gortler, and L. McMillan, “Image-based visual hulls,” in Proceedings of the 27th Annual Conference on Computer Graphics and Interactive Techniques, pp. 369–374, 2000.


[26] J. Schubert, L. Ferrara, P. Hörling, and J. Walter, “A decision support system for crowd control,” in Proceedings of the 13th International Command and Control Research and Technology Symposium, pp. 1–19, 2008.
[27] A. Sharma, T. Alam, and D. Srivastava, “Ad hoc network architecture based on mobile IPv6 development,” Advances in Computer Vision and Information Technology, vol. 224, 2008.
[28] P. Singh, P. Kumar, and T. Alam, “Generating different mobility scenarios in ad hoc networks,” International Journal of Electronics Communication and Computer Technology, vol. 4, no. 2, pp. 582–591, 2014.

Biography Tanweer Alam has been with the Department of Computer Science, Islamic University of Madinah, since 2013. He holds a Ph.D. (Computer Science and Engineering), an M.Phil. (Computer Science), an MTech (Information Technology), an MCA (Computer Applications), and an M.Sc. (Mathematics). His research areas include Mobile Ad Hoc Networks (MANET), Smart Objects, the Internet of Things, Cloud Computing, and wireless networking. He is the sole author of twelve books. He is a member of various associations, such as the International Association of Computer Science and Information Technology (IACSIT), the International Association of Engineers, and the Internet Society (ISOC). Mohammed Aljohani is with the Department of Information Systems, Islamic University of Madinah, Saudi Arabia. He received an MS degree in Information Systems from Concordia University, Canada.
